The revelations in +972 Magazine that Israel has used two AI systems, “Gospel” and “Lavender,” to help coordinate the mass killing of more than 30,000 Palestinians in Gaza have inspired widespread reflection and condemnation. Zhanpei’s essay looks into the origins of both this particular instance of technological slaughter and the way we reify and mystify AI systems as something outside of our own humanity. It’s a thought-provoking, urgent piece deeply rooted in both research and personal experience that I am immensely proud to publish.

—Jacob Kuppermann, Reboot Editorial Board

Ghost in a rhetorical machine

by Zhanpei Fang

The act of violence in the age of its mechanical reproducibility

I’ve been rolling the words “Gospel” and “Lavender” around my tongue recently. Perhaps you have too. It has felt impossible to look away from the catastrophe of history which is currently unfolding in Gaza; and the sheen of technoscientific sophistication given to Israel’s assault through the reported usage of these two artificial-intelligence systems to automatically identify Palestinians as targets is a terrifying and captivating thing to think about.

To quote from Yuval Abraham’s +972 exposés, while the “mass assassination factory” Gospel model focuses on marking buildings and infrastructures supposedly housing militants, “Lavender marks people – and puts them on a kill list.” An anonymous Israeli military source is quoted in the Lavender piece as saying “I have much more trust in a statistical mechanism than a soldier who lost a friend two days ago…The machine did it coldly.”[1] Many of those who have raised the initial alarm at these revelations have implicitly accepted the Israeli military’s own framing around their usage of AI systems, which is a diversion even as it grabs headlines, conveniently pushing the debate towards questions of “is the AI ‘smart’ or accurate enough” when the fidelity of the system is not just beside the point but actively obscures it.

Both those highly critical of and those who support Israel’s military operations are invested in the framing of AI as something which is extraordinary and possibly magical[2], the former in order to criticize the inhuman nature of coldly mechanized mass death, the latter to highlight Israel’s innovations in computational and precise ‘modern’ warfare. As an example of the former, Andreas Malm’s recent essay, “The Destruction of Palestine is the Destruction of the Earth,” calls the current carnage in Gaza the “first technogenocide,” drawing contrast to the earlier ‘low-tech’ genocides of the Bosnian Muslims and the Yazidi. While it is tempting, given the urgency of the moment, to exaggerate the novelty and singularity of the horrific war crimes we are witnessing, even critiques of militarized AI misattribute violence to the algorithm and pass over the observation that it’s impossible for an inanimate technology to have its own agency.[3] In being preoccupied with the purported technical intricacies of automated war-making methods, one fetishizes them, precluding any substantive critique or action.

This piece aims to identify the pitfalls in thinking about what is being called an ‘algorithmic genocide’ in Gaza. I’d like to push against the exceptionalism afforded to AI, as seen for example in pieces which set military uses of AI apart from previous iterations of techno-warfare. Rather, the spectre of ‘artificial intelligence’ is a reification: a set of social relations in the false appearance of concrete form; something made by us which has been cast as something outside of us. And the way in which AI has been talked about in the context of a potentially ‘AI-enabled’ genocide in Gaza poses a dangerous distraction. All of the actually interesting and hard problems about AI, besides all the math[4], lie in its capacity as an intangible social technology and rhetorical device which elides human intention, creating the space of epistemic indeterminacy through which people act.

Deflating AI exceptionalism

“AI exceptionalism” describes the way in which the ‘vibes’ of AI[5] lift it above the concerns and standards we typically apply to the problems these models are ‘solving’. Mel Andrews’ work on machine learning and the theory-free ideal addresses this phenomenon in the context of ML’s adoption in the natural sciences. Andrews takes on the claim that ML exists as a theory-free enterprise, on a novel and disruptive epistemic footing relative to classic statistical approaches, when it is in fact a necessarily theory-laden exercise. The data does not “speak for itself”[6], either in the context of academic research or in military applications.

Any ML model is, from its beginning, bound to a human conceptual apparatus. The considerations of its uncertainty quantification are value-laden, particularly when it comes to the question of what’s an “acceptable tolerance” to pull the trigger when used in a ‘defense’ or ‘security’ decision-support context. Every step of the algorithmic-learning processing chain is then soaked in ideology, and the rejection of the mysticism around AI/ML/DL as ‘opaque’ or ‘uninterpretable’ becomes politically imperative.[7] Research into ‘fairness’ or ‘algorithmic bias’ is primarily concerned with how choices in data or algorithms may reproduce social stereotypes in systems not specifically intended to cause direct harm, for example mitigating representation bias in a model used to predict healthcare outcomes or hiring decisions, or in a generative text model which is trained predominantly on literature from a certain geographic region. This kind of research, which is nowadays commonly presented at ML conferences, reveals itself as woefully inadequate to the task of understanding the phenomenon at hand, that of an AI system explicitly intended to create ‘kill lists’.

Reified technology as rhetorical cudgel

If the tools commonly deployed by those ML research areas which intersect with social issues are not suited for assessing the harms of ‘AI used for warfare’, then I propose we turn to Marxist critical theory. What we should be concerned with is not the algorithm which produces targets, but the social-rhetorical machine which produces justifications. I hope to expand on others’ commentary on the recent Gospel/Lavender stories[8], and provide a little more critical-theoretical scaffolding to their arguments, in thinking about AI not just as pretext but as social arrangement.

Adorno’s account of Marxian reification, as glossed by Gillian Rose in The Melancholy Science, characterizes reification as a social relation of people masked as a concrete thing.[9] Technology is then something which is reified, which “appear[s] as independent beings endowed with life, and entering into relation both with one another and the human race.” The sentiment is echoed by Heidegger in “The Question Concerning Technology” (1954) when he says “the essence of technology is by no means anything technological”, and identifies technology as a means to a human end, coupling its instrumental[10] and anthropological definitions: “For to posit ends and procure and utilize the means to them is a human activity.”[11] The excessive pride over our domination of the natural world with technology leads to the delusion that humanity encounters itself and only itself everywhere it looks; a species-level narcissism which appears to be reflected in, for example, the hand-waving we do in order to relate artificial neural networks to the biochemical mechanisms of the human brain.

Consider the agreements between military leaders and tech executives which are speculated to have allowed the IDF’s Unit 8200 access to WhatsApp data. When Google and Amazon provide the Israeli Ministry of Defense direct secure entry points into cloud computing infrastructure as part of Project Nimbus[12], show their willingness to sell pseudoscientific sentiment detection and personality analysis tools to a national government, and terminate those employees who have dared to organize against it, that is a social technology. The social arrangements and management methods[13] are themselves technologies that propagate and alter social life, just as much as the ML models they muster data for. This social technology hails vast material resources, finance capital, and institutional infrastructure[14], with its own supply chains and political economy.

Computer vision doesn’t learn to see like humans, but the other way around; the task of labeling vision datasets conditions our seeing. As Smits and Wevers (2021) argue, the ‘agency’ which is commonly attributed to vision datasets is produced by obscuring the power and subjective choices of their creators and the tightly-disciplined labor of crowd labelers. When Project Maven workers click through thousands of hours of security-camera footage or drone imagery to identify potential militants (adult men of ‘fighting age’), that does not discipline a computer’s seeing but rather the human seeing of the labelers.

Due to cultural depictions and the imprecise and fantastical language we use to talk about it, we have reified AI as something outside of us, something alien and inhuman summoned from elsewhere which strains towards the phantasmagoric goal of ‘approximating’ human intelligence, and that is what is incorrect and dangerous. It is perhaps one of the most human of engineering systems we have ever built. If we want to form a coherent critique of technology, capital and militarism, it is then even more important to be clear-eyed about what we mean when we say ‘artificial intelligence’—without metaphors, anthropomorphization or alarmism—given the seriousness of the stakes.

Humans in the loop; or, garbage in, garbage out

The reification of AI, which happens at all points on the political spectrum, is actively dangerous in the context of its being taken to its most extreme conclusion: in the ‘usage’ of ‘AI’ for mass death, as in the case of Gospel (‘Habsora’, הבשורה, named after the infallible word of God) and Lavender. This reification gives cover for politicians and military officers to make decisions about human lives, faking a hand-off of responsibility to a pile of linear algebra and in doing so handing themselves a blank check to do whatever they want. The extent to which these “AI systems” are credible or actually used is irrelevant, because the main purpose they serve is ideological, with massive psychological benefits for those pressing the buttons. Talking about military AI shifts the focus from the social relations between people to the technologies used to implement them, a mystification which misdirects focus and propagates invincibility.

There are things which are horrifying and exceptional about the current genocide, but the deployment of technology is not in itself one of those things; the usage of data-driven methods to conduct warfare is neither ‘intelligent’ nor ‘artificial’, and moreover not even remotely novel. As prior reporting from Ars Technica has shown about the NSA’s SKYNET program in Pakistan, Lavender is not even the first machine learning-driven system of mass assassination. I recently read Nick Turse’s Kill Anything That Moves: The Real American War in Vietnam (2013) and was struck by the parallels to the current campaign of extermination in Gaza[15], down to the directed-from-above obsession with fulfilling ‘body count’ as well as the creation of anarchic spaces in which lower-level operatives are afforded opportunities to carry out atrocities which were not explicitly ordered, an observation which has also been made of the Shoah. Thinking about it in this way allows us to fold AI into other discourses of technological warfare over the past century, such as the US’s usage of IBM 360 mainframe computers in Vietnam to similarly produce lists of targets under Operation Igloo White. Using technology as rhetorical cover for bureaucratized violence is not new.[16]

The Lavender piece by Yuval Abraham states that IDF soldiers rapidly rubber-stamped bombing targets “despite knowing that the system makes what are regarded as ‘errors’ in approximately 10 percent of cases”. But even if the error rate were 0.005% it wouldn’t matter, because the ‘precision’ canard is just laundering human intent through a justification-manufacturing apparatus which has zero technical component. Abraham reports that “sources who have used Lavender in recent months say human agency and precision were substituted by mass target creation and lethality,” but in reality exactly zero human agency has been removed. He writes that “once the list was expanded to include tens of thousands of lower-ranking operatives, the Israeli army figured it had to rely on automated software and artificial intelligence…AI did most of the work instead”, but this verbiage is a perverse reversal of cause and effect to create post-hoc justification.

There are some useful insights to draw out of the +972 revelations about Gospel and Lavender. I was struck in particular by the quote from one Israeli military source in the former:

Nothing happens by accident. When a 3-year-old girl is killed in a home in Gaza, it’s because someone in the army decided it wasn’t a big deal for her to be killed — that it was a price worth paying in order to hit [another] target. Everything is intentional. We know exactly how much collateral damage there is in every home.

More notable than the AI alarmism is how and why the IDF might know the address where every individual in Gaza lives, in order to bomb them in their private residences. The real value-add, the real alpha leveraged by the Israeli military, is the social and material infrastructure[17] which, built over decades, has allowed Israel to gather vast petabytes of data[18] on every granule of life in the Gaza Strip. This has previously taken shape in, for example, the Gaza Reconstruction Mechanism (GRM), which was established by tripartite agreement between the Palestinian Authority, Israeli government and United Nations in 2014 after Operation Protective Edge killed more than 2,000 Palestinians and severely damaged tens of thousands of Gazan homes. The GRM’s website, previously available at https://grm.report/ and apparently taken offline sometime between 29 January and 13 March of this year, macabrely tracked each tonne of cement and rebar (considered ‘dual-use’ materials which could be potentially used nefariously by Hamas) imported into Gaza through real-time interactive data visualizations, and included headings for “success stories” and testimonials from such august individuals as Ban Ki-moon. The GRM website published submitted requests and their approval rates, demonstrating the “depth of control exercised by the State of Israel over the urban form that is to emerge in Gaza, down to the most minute architectural detail”.[19] Francesco Sebregondi argues that the GRM, supported by the extremely granular GRAMMS (Gaza Reconstruction And Materials Monitoring System) online information-management database which functions in some capacity as Gaza’s ‘digital twin’, has brought forth, somewhat sinisterly, the dream of the ‘smart city’; thereby intersecting with larger critiques of the technocratic, data-intensive ‘smart city’ as implementing technologies of power.

For over a decade Gaza has been visited by its colonial occupier’s fast and slow violence, under a data-driven regimen which aggressively enforces which materials are allowed to pass through a metabolic membrane, down to the calorie.

Another line from the Gospel piece reads “the increasing use of AI based systems like Habsora allows the army to carry out strikes on residential homes where a single Hamas member lives on a massive scale”. Emphasis mine—that word ‘allows’ is the hinge upon which this whole grotesque charade rests. The algorithm isn’t choosing anything; the choices already happened in the compiling and labeling of the dataset. The collecting and categorizing of data—which data on individuals’ social media or GPS movements or purchasing activity is to be used, which to be excluded—is in itself the construction of an elaborate ideological apparatus.

What criteria have the IDF established to label their data? From all accounts it seems that the ‘targets’ in their automatic target identification system are every Palestinian adult male. When a supervised learning model is given human-annotated training data which identifies all adult-aged males as possible militants in order to give each Palestinian a probability score for their likelihood of being a ‘terrorist’, this concretizes social relations into a form which can be measured and optimized.

The primary source for the Lavender piece says “I had zero added value as a human, apart from being a stamp of approval.” Is the instrumentalization of people not the sine qua non of fascism?

The purpose of a system is what it does, and science is a thing which people do

Would we say that we “collaborate” with a combine harvester to collect corn crops? Or “collaborate” with a gun to kill people, in the way we commonly talk about “collaborating” with AI to do all sorts of tasks, from creating mediocre visual art to generating lists of families to annihilate? Is an F1 score of 0.7 or 0.8 or 0.9 really a useful thing to talk about, when we talk about the decision to take a human life?

This concern with “AI warfare” does feel like a sort of logical follow-on to Eyal Weizman’s widely-circulated 2006 essay on “walking through walls” wherein he claims that the IDF draws inspiration from Deleuze and Guattari for their spatial warfare strategies; does it really matter how much continental philosophy IDF officers may or may not read? Probably not, and it would perhaps behoove us to focus a little less on the intellectual machinations of those enacting atrocities.

Five years ago in Bethlehem, next to a section of the apartheid wall decorated with a Nelson Mandela quote, I visited Banksy’s Walled-Off Hotel, where I learned, squinting at the glass display cases, about how the IDF uses experimental weapons such as DIMEs and tear gas drones against Gazans, which they then sell as ‘combat-proven’ to European and other ‘civilized’ countries of the world. To get there my college best friend and I had passed through a refugee camp, and were stalled in traffic by the activity around a makeshift tent which had been erected in the street as a place of mourning for a paramedic shot and killed the previous morning.

Throughout my stay in her hometown near Ramallah one of my strongest impressions was of the granularity of surveillance, which saturated the air with unease; the biometric checkpoints, the aggressive and protracted questioning at the Allenby-King Hussein border crossing of a Chinese woman (me) who happened to be traveling with Palestinians. Even as an American citizen, I was unnerved when IDF soldiers boarded the bus with their service rifles somewhere around the Sheikh Jarrah neighborhood of East Jerusalem and individually checked each passenger’s identity card. Alone because my friend was not allowed to enter Jerusalem with her green West Bank ID, I walked through the Old City with my sense of unease and looked at the Dome of the Rock through loops of barbed wire, after stuffing the paper prayer which an American Jewish friend had asked me to deliver into the cracks on the women’s side of the Western Wall.

Palestine, in many ways, stands at the end of post-war Western civilization.[20] And it is particularly brutal and ironic that hypothetical concerns about ‘alignment’ are being increasingly mainstreamed at the same time that this genocide is happening; it feels wilfully blind, if not malicious. Where are the ‘AI safety’ thought leaders in this utter vacuum of commentary on Israel’s commonly-remarked-upon ‘leading role’ in ML/AI research[21], and its connections to the Israeli military’s current war on Gaza over the last seven months?

I’m not trying to say that AI is in itself just a conceptual cheat, but that the mental image conjured by the phrase ‘artificial intelligence’ allows for a dangerous elision to occur, the vibes of indeterminacy attached to which create a space for slipperiness of intention, even when that intention is explicitly baked into the data-labeling step from the start. Here is what will happen if we allow this cognitive trap to metastasize, and the space of uncertainty to go unclosed and unaddressed. We can expect the laundering of agency, whitewashed through the ideological device of ‘the algorithm’, to begin to be deployed in the arena of international law, given the ways in which Israel is already trying to sidestep the ‘genocidal intent’ it has been charged with at the ICJ. The fetish of AI as a commodity allows companies and governments to sell it, particularly Israel[22], which still enjoys a fairly glowing reputation in the ML/AI industry and research world.

The epistemic problems of AI, as it turns out, are actually deadly. This is what our Bayesian world increasingly looks like—fuzzy logics, sigmoided, softmaxed, its slippery rhetoric allowing people to do horrific things to each other. And I can only hope to someday soon hold the hands of the one who calls me habibti and look into her eyes in the wreckage of modernity.

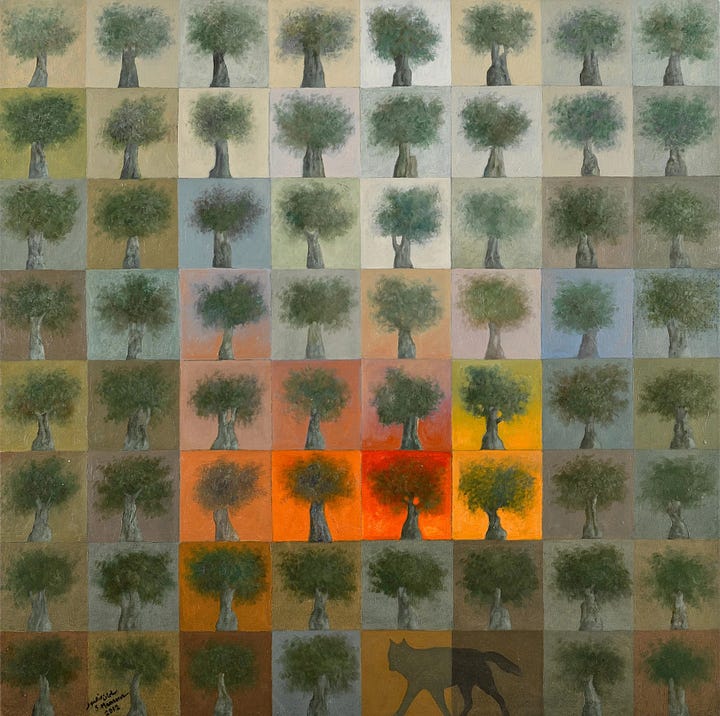

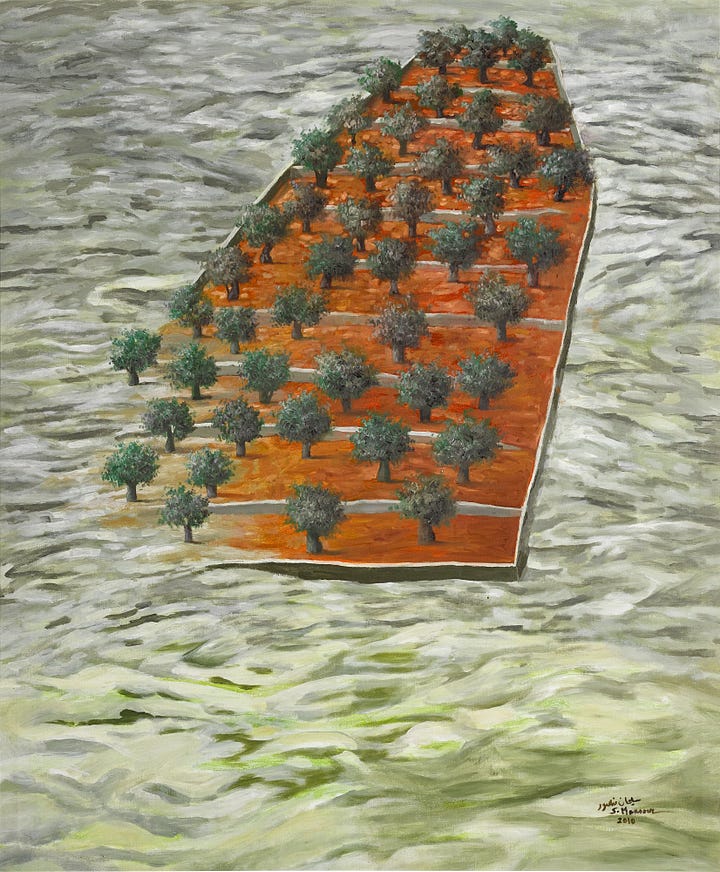

Zhanpei Fang is a painter concerned with digital visual cultures and a PhD student working on novel deep learning methods for observational sensing datasets. She is not a technologist, but she is currently a member of the Conflict Ecology and Deep Machine Vision groups at Oregon State University.

Reboot publishes essays on tech, humanity, and power every week.

🌀 microdoses

Matteo Pasquinelli’s The Eye of the Master: A Social History of Artificial Intelligence (2023) is a great social history of AI.

This story is absolutely wild: “How Alaska's little-known spaceport revolutionized military conflict”

Olivier Messiaen's "Quartet for the End of Time", written and first performed in the German POW camp in which he was imprisoned during WWII; written for third-hand instruments and musicians, chosen from what and who they could find among the prisoners.

💝 closing note

If you have pitches about tech, power, and ideology, in the context of the assault on Gaza or otherwise, we welcome them.

—Jacob & Reboot team

Emphasis mine.

Refer to this excellent essay from 2018, by Jathan Sadowski for Real Life Mag: “Potemkin AI”, which among other things argues that the belief in an AI used for, for example, surveillance, constructs a panopticon whose disciplinary power is exactly as strong as people’s belief in it.

I’m taking a sideswipe here at object-oriented ontology/New Materialism; see Andreas Malm’s Verso interview where he takes down transhumanism and OOO, and Alf Hornborg’s article “Objects Don't Have Desires: Toward an Anthropology of Technology beyond Anthropomorphism” (American Anthropologist, Vol. 123 No. 4, 2021) which argues that the material or postdualist turn depoliticizes technology by naturalizing it.

As Lucy Suchman puts it while directly commenting on the Gospel/Lavender stories: “Data here are naturalised, treated as self-evident signs emitted by an objectively existing world ‘out there,’ rather than as the product of an extensively engineered chain of translation from machine readable signals to ad hoc systems of classification and interpretation.”

“The distinctness claim latches onto a real novelty in much ML deployed toward scientific ends: potential for misuse and lack of methodological standards.” Also see tweet thread.

For example, as phrased astutely by Kevin Baker in his Substack: “The accuracy, efficiency, and fairness of these systems is not the point…[they] work by mystifying questions of responsibility and agency behind a veil of technology, by subtly changing the subject…They’re machines for hiding behind, instruments of moral and legal arbitrage. As such, the normal forms of AI critique not only misapprehend the problem, but by focusing attention too tightly on technological politics, they actively help to deepen the illusion.” Kelly Weirich also points out the role AI plays in creating a false sense of authority and credibility, while alienating those people who authorize each airstrike from the targets of their violence: “Lavender offers a blank space in the midst of a causal chain of moral responsibility between genocidal intent and genocidal action, while paradoxically providing a veneer of authority for that action.” And +972 has also recently published an opinion article by Sophia Goodfriend about why “human agency is still central to Israel’s AI-powered warfare”.

In Capital, Vol. 1, Ch. 1, section 4, Marx refers to reification as “a definite social relation between men...[which] assumes...the fantastic form of a relation between things”. More precisely, Adorno characterizes reification as a form of identity thinking, which is a relation between the universal and the particular, implying a concept is rationally identical to its object (Gillian Rose, The Melancholy Science, 59). For him reification is specifically the phenomenon in which a relation between men appears in the form of a natural property of a thing. When we say “consciousness is completely reified”, we mean that it (consciousness) is only capable of knowing the appearance of society, as if institutions and behaviour as objects ‘fulfil their concepts’. In a society where consciousness is completely reified, no critical theory is possible, and the “underlying processes of society are completely hidden and that the utopian possibilities within it are inconceivable” (Rose, The Melancholy Science, 62).

This observation can also be picked out, in shades, in Dialectic of Enlightenment which is concerned with ‘instrumental reason’ or ‘technological reason’, which existed in pre-capitalist society but only became a 'structuring principle' in capitalism, as well as in Eclipse of Reason (1947) wherein Horkheimer argues that in modernity the concept of reason has been reduced to an instrument for achieving practical goals assessed on its operational value, rather than a means of understanding objective truth. “For example, he sees engineers as loci of active instrumentalism” (from the SEP entry on Horkheimer). Horkheimer claims that the inexorable drive of instrumental reason results in a distorted picture which is falsely understood as the only true picture of the world (as Adorno would have characterized it, a form of identity thinking.)

Heidegger then goes into a discussion of ‘cause’ and a formulation of technology as a kind of poiesis, a way of bringing forth or revealing; but argues that modern technology’s mode of revealing is not poiesis but a ‘challenging forth’ which transforms our orientation to the world (enframing, Gestell), converting the natural world and humanity itself to some extent to ‘standing reserve.’

See also TIME Magazine reporting: “Google Workers Revolt Over $1.2 Billion Israel Contract”, “Google Contract Shows Deal With Israel Defense Ministry”. The latter, from 12 April 2024, gave the confirmation that Google is, despite vigorous public and internal statements to the contrary, in fact providing direct cloud computing services to the Israeli Ministry of Defense, which has its own secure entry point into Google-provided computing infrastructure as part of Nimbus, as well as consulting services set to have started on April 14th. And a recent The Intercept exclusive revealed that two leading state-owned Israeli weapons manufacturers are required to purchase Amazon/Google cloud services through Project Nimbus.

Other possible examples include debt, labor unions, just-in-time production, gender roles, Fordism, Taylorism.

From the 2022 Intercept piece: “The data centers that power Nimbus will reside on Israeli territory, subject to Israeli law and insulated from political pressures.”

A more brazen and direct parallel is the designation of ‘kill zones’ in the Strip, exactly as did the United States with free-fire zones in Vietnam. “‘In practice, a terrorist is anyone the IDF has killed in the areas in which its forces operate,’ says a reserve officer who has served in Gaza.”

Again, this has been observed of the Shoah. See Arno Mayer’s Why Did the Heavens Not Darken?, which notes that “To overemphasize the modernity and banality of the killing process is to risk diverting attention from its taproots, purposes, and indeterminacies…the latest technical and bureaucratic skills were not essential to feed the fury of the Judeocide” (19), and Robert Proctor’s Racial Hygiene, and the literature (mostly in German, some in English) on Topf and Sons, the engineering firm that contracted with the SS in Auschwitz. More recently, in the drone wars of the 2010s, backed by all of the rhetorical power of 'modern' 'precision warfare' the United States saw fit to assassinate children, families, American citizens, and wedding processions. See as well Lucy Suchman’s article “Imaginaries of omniscience: Automating intelligence in the US Department of Defense”, Social Studies of Science Vol. 53 No. 5, 2022.

For more on the intensity of Israeli surveillance infrastructure in Palestine, see (to start) Darryl Li’s article on Gaza as laboratory (2006), Eyal Weizman’s Hollow Land (2007, reprinted 2017), and Antony Loewenstein’s The Palestine Laboratory (2023).

Another parallel example: the MongoDB database that was leaked in 2019 showing how much data China has been collecting on citizens in Xinjiang.

Francesco Sebregondi in the anthology Open Gaza (2020), edited by Michael Sorkin and Deen Sharp, 203. For more on the GRM, see Barakat et al.’s article “The Gaza Reconstruction Mechanism: Old Wine in New Bottlenecks”, Journal of Intervention and Statebuilding, Vol. 12 No. 2 (2018).

I enjoyed Pankaj Mishra’s essay for LRB, “The Shoah after Gaza”. Pull quote: “It hardly seems believable, but the evidence has become overwhelming: we are witnessing some kind of collapse in the free world.”

See: the discourses on Tel Aviv as the Silicon Valley of the Middle East, Israel as a “startup nation”, Towers of Ivory and Steel (2024) by Maya Wind which describes how Israeli universities are implicated in Israeli state violence against Palestinians, PACBI, Hebrew-language reporting quoting Israeli security figures’ fears regarding an academic boycott of Israel.

A Tor Project blog post summarizes a number of prominent examples of the governmental and financial arrangements which scaffold the Israeli surveillance industry and how these technologies are exported globally.
