Copyright is a charged topic, and conversations around it often spiral or stall. This essay traces its origins and original purpose, examines how it is straining under the weight of generative AI, and explores speculative yet pragmatic paths forward that balance innovation with fair compensation for creators. Failing to address this shift risks undermining both the economic foundation of creative labor and the quality of future models.

Thank you to the Reboot board for their thoughtful feedback and to the models that helped along the way.

—Hamidah

Pricing the Machine Feed

By Hamidah Oderinwale

“Information wants to be free but is everywhere in chains,” writes McKenzie Wark in A Hacker Manifesto. While content is now cheap, truly novel, hallucination-free work will always be expensive.

Copyright was originally designed in the 1700s to balance two interests: protecting the livelihoods of creators and promoting the public good through knowledge sharing. Authorship is incentivized by a set period of exclusive revenue rights, after which works enter the public domain. Some have argued that copyright stifles creative production, privileging dominant artists and corporations at the expense of new entrants, but for the most part, this legal framework has stood the test of time.

The AI gold rush, however, poses an existential challenge to the copyright regime. When an image or essay is generated by AI, who exactly “created” the artifact—the prompter, the model developer, or everyone who showed up in the training data? AI companies argue that generated content is a form of synthesis rather than copying—like a budding painter drawing inspiration from Monet. But was “fair use” ever meant to cover the unprecedented speed and frictionlessness of a prompt? If copyright is valuable, we need to figure out its place in today’s world. This is a rare opportunity to rewrite the rules for humans and machines—experimenting with how public goods, especially the products of human intellectual labor, are valued and distributed. This is a choice we must make with intention.

Answering the questions ahead calls for researchers who can devise new doctrines; engineer harmony between creatives and model-builders; design revenue-sharing models for a new Renaissance economy; and engineer incentives, as mechanism designers (a class of algorithmically minded economists) do. Indeed, modern governance is as much a matter of R&D as it is of policy and orthodox social science.

Copyright in context

Content is a unique good: it’s non-rivalrous (i.e., my use doesn’t prevent yours) and it’s excludable (i.e., the good can be made inaccessible) through its cost. Copyright manufactures scarcity for the sake of remuneration.

Copyright seeks to balance an artist’s right to exclusivity with the public’s ability to access and reuse creative work, granting creators legal control while allowing limited, typically non-commercial, exceptions under fair use. Section 107 of U.S. copyright law establishes four factors to determine fair use: (1) the purpose and character of the use, (2) the nature of the copyrighted work, (3) the amount and substantiality of the portion used, and (4) the effect of the use upon the potential market for or value of the copyrighted work. Of these, the fourth factor is under the most pressure in today’s copyright landscape: as AI systems generate substitutes for original content, they threaten to undermine the very markets that sustain creators.

Copyright law was made for an age when most media came in print. Today, an artist’s work need not exist physically. It lives on the internet, where it can be ‘deleted’ in a heartbeat, edited without a trace, and reproduced at teraflop speed.

U.S. copyright law traces back to 1790, with its first major Supreme Court test arriving just decades later in Wheaton v. Peters (1834): a case where Wheaton sued Peters for copying excerpts from his court reports and lost. Yet over two centuries later, despite radical shifts in technology and culture, the doctrine remains largely unchanged even as plagiarism and copyright infringement persist in a very different world.

The last meaningful updates include the Copyright Act of 1976, which extended copyright protection to both published and unpublished works, set the term of protection at fifty years after an author’s death, and codified the doctrine of fair use.[1]

Digital copyright law at its limits

Legal scholars typically analyze decisions through historical context, but there’s little judicial precedent for digital law. The first major piece of internet-related legislation was the 1996 Communications Decency Act, followed by the 1998 Digital Millennium Copyright Act, which has become a cornerstone of many publisher-versus-platform debates. Still, amid rapid change, jurists are left stretching outdated laws to fit a generative world those rules were never meant to govern.

As it is, copyright appears structurally aligned with large corporations. Individuals have the right to defend their works in court but face unfavorable odds. Comedian Sarah Silverman and author Richard Kadrey sued Meta in July 2023, but a judge dismissed most of their case by November of that year. Many artists’ claims against Stability AI and Midjourney were also dismissed in 2024. Raising a case in court doesn’t guarantee a win, and plaintiffs are left uncertain of the outcome for months, maybe even years, while they bear the costs.

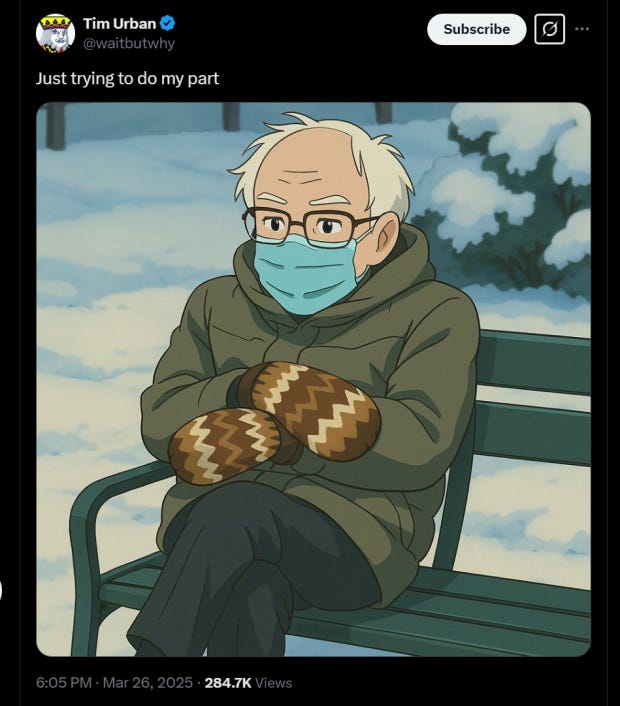

Indeed, the line between copying and inspiration has always been blurry. In machine learning, developers call copying “memorization.” In literature, “plagiarism.” Take OpenAI’s recently launched 4o model: users can turn their photos into Miyazaki-style images. When the reference is a cultural phenomenon or simply obvious, the output feels like an homage. But what happens when a prompt produces a close copy of a lesser-known artist’s forest scene?

While attribution has become a fuzzy property of generative content, the law is about drawing clear lines. Yet compensation now sits in legal, moral, and technical grey zones. Modern law will rely on systems and procedures to determine where imitation ends and original creation begins. Artists shouldn’t have to gamble in court just to prove they matter.

Creative cannibalism

Artists often say that innovation is just recombination. Though the origins of the quote are debated, the line “Good artists copy; great artists steal” is often attributed to Picasso. But even if art involves copying, it still depends on a foundation of original work.

Without copyright, or a functional replacement, we risk a collapse of the creative ecosystem. As AI makes up a growing share of the U.S. economy, some argue that protecting creators is a naive concern when national competitiveness is at stake. But consider the scenario where we do away with copyright protections: without financial reward, putting the artifacts of your unique insight into the public domain seems fruitless. Producing novel content becomes a hobbyist’s affair. As the repository of human knowledge dwindles, models train recursively on their own output and deteriorate. Innovation stagnates, less basic research is produced, and cultural production narrows. What we gain in short-term data access, we lose many times over in (human) insight and creativity.

Your work is taken, split into tokens, fed recursively to models, and dissolved into generative output. This fuels “model collapse,” a phenomenon where the limitations of generated content (e.g., hallucinations, homogeneity) are amplified with each round of training on that content until outputs go off the rails. Your work is no longer yours. You can’t make a living. You decide to paywall it, knowing fewer people will see it.

Within a few years, we would find ourselves with no human-generated content left to feed our models. Synthetic, or model-generated, data has been cast as a solution, but there is also evidence that we’re better off when we keep human insight in the mix. After all, synthetic data needs its ground truth.
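The recursive degradation described in this section can be illustrated with a toy simulation. This is a minimal sketch, not a claim about how any real language model behaves: it assumes the "model" is just a Gaussian fitted to a small sample, and that each generation is trained only on data produced by the previous one. The function name and parameters are hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Toy model collapse: refit a Gaussian to its own samples, over and over.

    Returns the fitted standard deviation (the "diversity" of the data)
    at every generation, starting with the original distribution.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for human-made data
    history = [std]
    for _ in range(generations):
        # The current model generates a small synthetic dataset...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...and the next model is fitted to that synthetic data alone.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_recursive_training()
    print(f"spread at generation 0: {history[0]:.3f}")
    print(f"spread at generation {len(history) - 1}: {history[-1]:.3g}")
```

Because each fit is made from a finite sample, the estimated spread tends to shrink a little with every generation, and the losses compound. In this toy setting, mixing fresh samples from the original distribution back into each generation is what keeps the spread from collapsing, which is the sense in which synthetic data needs its ground truth.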

Disrupting the value-chain

LLMs aren’t independently generating novel content—they rely heavily on existing work. So while these statistics shouldn’t be cause for alarm on their own, they do highlight the fragility of our current systems for compensating content creators—who, in many ways, are also the data producers fueling these models.

Copyright was conceived as arms for the underdog: a way to empower “authors, artists, and scientists to create original works,” claim ownership over them, retain the right to consent, and do so even in the face of powerful corporations. At its core, copyright is about rewarding labor through compensation and preserving the power to grant or withhold permission. When authoring new rules, we should start with our ‘why’ and ensure this pillar remains intact.

Model developers can charge for access, but individual creators, forums, sites, and platforms rarely have the infrastructure to be similarly compensated. When you pay for a pro subscription or an API token, you’re effectively receiving a Creative Commons Zero (CC0), public domain-style license for the model’s outputs—free to use, even commercially. If AI’s value is augmenting human capabilities, humans and their works are similarly deserving of remuneration.

Feeding the machine, rewarding the feeder

What might come next? As public data dwindles, AI labs are increasingly turning to private content, scaling up at hidden costs. Even if the goal is to accelerate model capabilities, bypassing copyright may yield more high-quality training data today but offers no guarantee of quality data tomorrow. If copyright can’t keep pace, we need alternative systems that reward creators without stifling innovation. From algorithmic royalties to data cooperatives, new models are emerging: some are already gaining traction, while others remain more speculative.

Learning from platform economies

To help us conceive of such systems, we can start by examining existing approaches. Take a New York Times staff writer, who earns a salary but in return gives up ownership of their work. Then compare them to the independent blogger, who is left to represent themselves. Should training data from employees benefit their employers while independents are left on their own?

Historically, creators have fought for a fair share of platform-generated revenue. Substack is now home to the internet bloggers who might have otherwise stayed on personal sites, relying on Buy Me a Coffee or Patreon. Similarly, being a musical artist is synonymous with being on Spotify, where you’re paid in recording and publishing royalties. But these platforms have long undervalued creative labor. Consider Spotify, which has made it increasingly difficult for artists to earn from recorded music, pushing them toward merch and live shows instead.

As more organizations like Vox Media and companies like Reddit strike content-licensing deals with AI firms, will platforms share the resulting profits with the people who generate the content—or more precisely, the data—they rely on? Some may form creator collectives to bypass corporations while still benefiting from collective power. For both, the next challenge is ensuring platforms share profits fairly.

Platforms will need to develop clear metrics to assess the value of content in training datasets. This might resemble Google’s cost-per-view model, where compensation rates reflect content’s uniqueness and relevance to specific applications. The rarity or uniqueness of data inputs should also be factored into pay-rates. Another challenge is judging authenticity at scale. The Coalition for Content Provenance and Authenticity (C2PA) has rallied tech heavyweights to try. Now it’s time to see whether tools like digital watermarks and persistent metadata will hold up.
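To make the idea of a value metric concrete, here is a minimal sketch of one possible payout rule. It is an assumption for illustration, not Google’s model or any platform’s actual formula: each work gets hypothetical `rarity` and `relevance` scores between 0 and 1, and a licensing pool is split pro rata by the product of the two. The names `Work` and `split_pool` are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Work:
    creator: str
    rarity: float     # hypothetical score in [0, 1]: how scarce this kind of data is
    relevance: float  # hypothetical score in [0, 1]: how useful it is to the application


def split_pool(works, pool):
    """Split a licensing pool among creators, pro rata by rarity * relevance."""
    weights = {}
    for work in works:
        # A creator's weight is the sum of the weights of all their works.
        weights[work.creator] = weights.get(work.creator, 0.0) + work.rarity * work.relevance
    total = sum(weights.values())
    if total == 0:
        # Nothing in the dataset carries weight, so nothing is paid out.
        return {creator: 0.0 for creator in weights}
    return {creator: pool * weight / total for creator, weight in weights.items()}


if __name__ == "__main__":
    works = [
        Work("archivist", rarity=1.0, relevance=0.75),
        Work("blogger", rarity=0.5, relevance=0.25),
        Work("blogger", rarity=0.5, relevance=0.25),
    ]
    print(split_pool(works, pool=1000.0))
    # prints {'archivist': 750.0, 'blogger': 250.0}
```

The hard part is not the arithmetic but the inputs: someone has to decide how rarity and relevance are scored, and a multiplicative rule like this one pays nothing for work that scores zero on either axis.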

Are paychecks enough?

So far, financial security alone hasn’t been shown to dramatically boost creative output. In 2024, OpenResearch, with support from Sam Altman, released the findings of its Unconditional Cash Study, a large-scale Universal Basic Income (UBI) experiment, and they didn’t suggest that UBI in a post-labor world would be as fruitful as one might hope. The results didn’t support the assumption that UBI would let more people pursue their passions instead of being tied to their 9-to-5s and share the outputs of their passion projects in the public domain.

Admittedly, cultural resilience wasn’t the project’s focus. Future studies might consider the generative creator economy. But not all output is equal: meaningful work often prioritizes depth over volume, demanding effort and originality. This raises the question: what is the metric for care, and is it worth measuring? To add, do developers with more disposable income do more open-source work (like responding to more queries on the programming advice forum, Stack Overflow)? And how many more publications do grad students with generous stipends and voluntary assistantships write?

Headhunting for datasets

Perhaps we’re in a new age of the commissioner and the curator. But instead of picking works to go into physical galleries, we have curators picking works to go into datasets: unique collections of rare, digitized, and annotated works that feed models performing specialized tasks for particular people. It’s then the job of the curator to pay for the works they choose to put in their collections. There are already a number of data provider companies like Scale AI, but it may be time for one whose moat is its taste, infused with a renaissance flair.

There are caveats. First, under 17 U.S.C. § 103, copyright in a compilation covers only what the compiler contributes, such as an original selection or arrangement, and not the underlying public domain works themselves. Second, this top-down method pays artists indirectly and often disproportionately.

The tractability of distributive democracy

New models depend on corporate structures, often at odds with individual empowerment. Decentralized tools like blockchain offer creatives more control and profit, but they risk forging a new technical elite.

Through Data DAOs (Data Decentralized Autonomous Organizations), individual data producers, like a Reddit user with access to their own consumer data, can track how their contributions are used and get paid accordingly. But to play devil’s advocate: how many Redditors even know this is an option? Solutions like these often feel built for a technical minority, rather than the broader public they’re meant to empower.

I’m less convinced that app users’ data should be the focus of compensation. Rather, what would it look like for creators of unique artifacts—authors, researchers, artists—to be paid by the platforms or publishers that host their work? Personally, I care more about the labor behind writing a book than my user data on Instagram being sold to advertisers.

Assigning the tickets

Every major undertaking needs clear leadership—but who will take charge? It’s tempting to place full responsibility on large AI labs, but accountability is difficult to enforce from the outside. Creatives deserve to focus on their work, yet they remain the most invested in protecting their rights. Governments hold power, but leadership is temporary. Startups move fast without long-term mandates, and nonprofits often lack the infrastructure to scale. So where does oversight come from?

We need domain experts bringing their knowledge and experience into the large labs—and it will take actively steering researchers into these neglected waters. Hal Varian, Google’s Chief Economist, is a prime example. Before joining Google, he was a professor at UC Berkeley specializing in mechanism design and information. He was recruited in the early 2000s and spearheaded Google’s ad auctions. His trajectory shows that the theory need not exist in a vacuum.

In a 2024 NeurIPS talk, OpenAI co-founder Ilya Sutskever (now building Safe Superintelligence Inc.) claimed that we should defer to the government to ensure AI progress goes well. I’m optimistic about policy as a lever for change, and I’d go so far as to say that any plan to reshape society without government is ill-informed. But it’s equally shortsighted to ignore the actors actively building the systems we hope to govern.

A new renaissance economy

In the absurd—but increasingly plausible—world imagined by some, where AI systems dominate the labor market, we must decide how to meaningfully value human labor and creativity. If generative models absorb and replicate human output, then compensation becomes one of the last tangible ways to assert dignity, authorship, and agency. Money may be an imperfect proxy, but it shapes the decisions at society’s core.

Fair and durable governance requires more than technologists or policymakers; it needs broad and accountable participation. Looking ahead, we need to design systems that compensate human input, preserve creativity, and hold AI accountable to the public—work that demands the diverse expertise of those helping to train it.


🌀 microdoses

Alex Reisner’s writing about “Books3, a collection of 192,000 pirated e-books being used by Apple, Meta, Bloomberg, Nvidia, and other companies.”

“Copyright for Literate Robots” by James Grimmelmann (2016)

Inventing the Future: Postcapitalism and a World Without Work by Nick Srnicek and Alex Williams (2015)

Maybe everything can be computer. Mechanize, a new startup founded by staff from Epoch AI, is trying to automate work—and they’re hiring.

Watermarks might make it easier to catch corporate leaks.

More on the struggles of creatives by Lucas Gelfond and Jasmine Sun

“The Uneasy Case for Copyright: A Look Back Across Four Decades” by Stephen G. Breyer (2011)

💝 closing note

If you’re interested in any of these questions or are thinking about similar things, reach out (hamidah@joinreboot.org)! If you’d like to write for Reboot you can also use our pitch form.

—Hamidah & Reboot team

[1] Fair use is a legal construct that permits limited use of copyrighted material without permission, typically for purposes like commentary, criticism, or parody. It often turns on whether the use is “transformative,” that is, whether it adds new meaning or expression rather than simply copying. But what counts as transformative is deliberately vague. Like free speech, its boundaries were left open to interpretation, leading to decades of legal ambiguity and expensive court battles. In practice, fair use functions less as a guarantee and more as a defense, one that often favors those with the resources to fight.