It’s been approximately three years since the launch of ChatGPT vaulted “A(G)I” into public consciousness. No coincidence that, around the 2.5-3 year mark, a bunch of AI books have now hit the market…. Jasmine, Jacob, Shira, and I talk through as many as we can get to in this long(! sorry) podcast. In reverse chronological order:

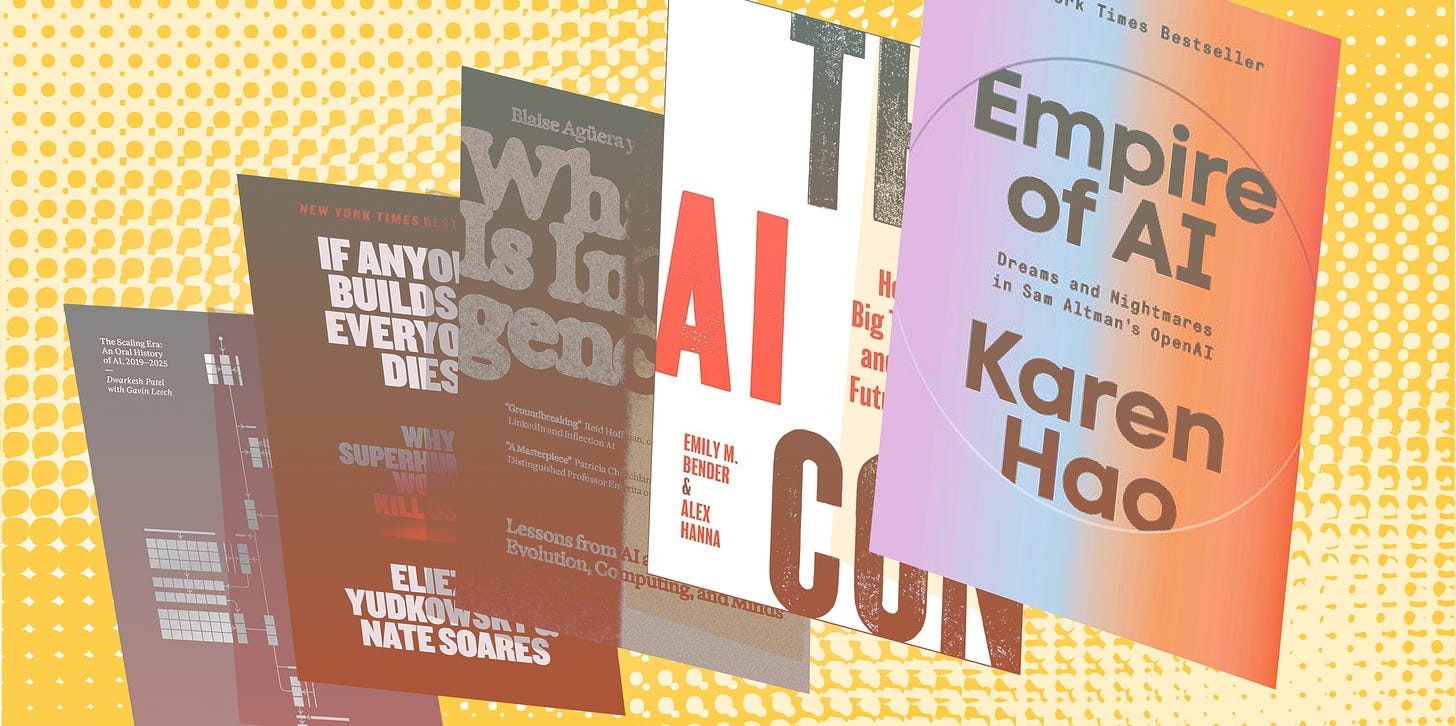

The Scaling Era: An Oral History of AI, 2019-2025 by Dwarkesh Patel and Gavin Leech (October 2025)

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All by Eliezer Yudkowsky and Nate Soares (September 2025)

What Is Intelligence?: Lessons from AI About Evolution, Computing, and Minds by Blaise Agüera y Arcas (September 2025)

The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want by Emily Bender and Alex Hanna (May 2025)

Empire of AI: Dreams and Nightmares in Sam Altman’s OpenAI by Karen Hao (May 2025)

An abridged transcript is below, or jump to the bottom of this post to get our “buy/borrow/skip” verdicts (spoiler: unfortunately, most people will probably only find around 1.5 books worth reading). As always, the audio version is more than a little spicier than the transcript.

The Scaling Era – “A primarily aesthetic object”

Jessica: This book is a compilation of a bunch of interviews that [tech podcaster] Dwarkesh has done with various guests over the last few years. What I didn’t realize before reading it (because I thought it was just gonna be all of the podcasts stapled together) is that they actually sliced up all of the podcasts by subject. So the first chapter’s about scaling, and there are other chapters about alignment, interpretability, whether takeoff will happen. All of the conversations are snipped up and rearranged by topic instead of by person. They also added a bunch of footnotes and margin annotations for various technical terms. So on any given page, there are notes like “here’s what attention is” and “here’s what a transformer is,” and there are little footnotes with additional commentary throughout the whole book.

Jasmine: I am curious how the form factor is to read, with the sliced-up interviews.

Jessica: I’m so glad you asked, because I was trying to be very neutral and just describe what was happening, but the actual experience of reading it was, well…. I respect the vision, but I think it’s hard to execute coherently. They’ll be talking about one thing with one person and then immediately jump to a different topic with a different person, and it’s not obvious why there was a transition there. Or, you might have a conversation with one person that’s not about alignment and not about scaling, but about how scaling interacts with alignment. And different chunks of this conversation are pasted in different parts of the book, but you can tell they used to be together, and I wish I could have just read the one conversation.

Overall, it feels like a primarily aesthetic object. How many people who buy this book are going to read it from front to back? I don’t think very many, because... the people who are buying this book are probably Dwarkesh listeners. And if you are a Dwarkesh listener, then you’ve already consumed this content.

Jacob: The format reminds me most of a very different kind of history of a scene, which is Meet Me in the Bathroom, which is this famous history of indie rock in New York City around 2000, when bands like The Strokes or the Yeah Yeah Yeahs were blowing up. And it’s just a bunch of quotes from people, all put together vaguely into one narrative. And I think even though [Dwarkesh’s] book is probably slightly more coherent because it’s interviewing computer scientists rather than a bunch of indie rock musicians, it might be similar in that it kind of captures some part of the spirit of the moment, but it also feels slightly incoherent as a book.

Jessica: Yeah, it’s weird because there’s a ton of citations, but there’s obviously no fact-checking. Well, except there’s this one part when he’s interviewing Francois Chollet, who’s famously a deep learning/ AGI skeptic, and Francois is like, “There’s no way these models will ever be able to accomplish X task.” And then there’s a footnote that’s like, “This task was accomplished in some month in 2024.” So there’s some selective fact-checking happening.

But by and large, it’s just a podcast where people are just saying shit, and none of it is actually verified. My experience of reading most of this book was that I was reading it aloud in the car to my partner [also an AI researcher] as we were on a road trip. I was sitting there in the passenger seat, reading this aloud to both of us, and pretty regularly — like once every page — I would come across something where I was like, “I’m pretty sure this is not true. But I’m not sure if I can actually verify this.” Then we would discuss it and we’d be like, “It’s probably true enough that it’s not totally, totally off, I guess this is just a podcast so we can’t ask for more….”

So I think that’s one of the weaknesses, and it’s frustrating because at the end of the day, it’s podcast transcripts — so on the one hand, they’re not really pretending to be something more rigorous than that, but on the other, they still are trying to signal something about rigor, with their footnotes and citations or whatever, and that makes me want to judge more harshly.

Mark Zuckerberg comes across as the most intelligent and thoughtful person in this book — and it’s not like I have very warm feelings about Mark Zuckerberg in general. He doesn’t have a very large part in the book, but the one place he does show up is the alignment chapter where Dwarkesh keeps trying to ask about existential risk. And Mark is just reiterating, “We have lots of near-term risks to focus on, they are a big challenge, and we as a company are very focused on getting that right.” And I was like, “Wow, Mark, great thoughts.”

Jasmine: I am sure Meta is clearly doing a lot of work to reduce both short and long-term AI risk right now, as we can see from all their product releases.

Jacob: This is also very funny because I feel like when Mark Zuckerberg actually went on that podcast, wasn’t that when he was talking about how the average American has fewer than three friends, but has demand for 12 to 15 friends or whatever? Which I think a lot of people were not huge fans of….

Jessica: That’s the other content-wise problem with this book — I mean, it’s just a problem with whatever Dwarkesh chose as material for the book, which is very much focused on the big shiny, “number go up,” AGI becomes as smart as an AI researcher and replaces all the AI researchers. But there is no discussion about literally any other type of harm. There’s a bunch of Leopold [Aschenbrenner] in here saying AGI is basically a nuclear weapon, therefore, geopolitics. But there’s no conversation whatsoever about literally any other possible bad thing that could come about from building AI or scaling it. I think that’s a missed opportunity. But again, it’s just not his beat. So what can you do?

I guess the big question is, if you are an AI researcher, why are you listening to this? And maybe it’s just to hear what your CEO thinks.

Jasmine: That’s an interesting question because people in the AI research community do listen to the podcast, and it does set debates and discourse cycles, right? The Rich Sutton interview or the Karpathy interview where everyone’s sort of, “Okay guys, it’s time to debate RL scaling.” Or “Okay guys, do we actually all update our timelines longer?” Or, “Does continual learning matter?” And there clearly is something that people in the research community are getting from it and the podcast does matter. I’m trying to think through what that is.

Shira: It sounds to me like it’s partially gossip. It’s community, in some sense; people want to have a shared conversation, and this is just one of the touchstones.

Jacob: Yeah. It reminds me of when Pitchfork publishes an album review — God, I’m going to a lot of music metaphors today, sorry — or The Cut publishes a big salacious essay about polyamory or whatever. A lot of the debate is not about the particular text of the article or the text of the review. It’s just signaling, “Okay, let’s all have a big online fight about this topic.” So I feel like people talk about, “Oh, this person was on Dwarkesh to talk about X topic,” and then they talk about X. They don’t really get into the depths of specific claims... they aren’t pulling out a particular sentence and doing exegesis on it, which is probably for the best.

If Anyone Builds It, Everyone Dies – “won’t waste too much of your time”

Jasmine: First of all, I think the title is so funny. If Anyone Builds It, Everyone Dies is a very inelegant title, but it tells you a lot about the way that Eliezer Yudkowsky thinks. He is the father of the “rationalist movement,” which is all about things like Bayesian reasoning and trying to have fewer cognitive biases. And I’m pretty sure the reason that they called the book that is because they were like, “What is the title we can pick so that every time someone says the name of the book, they state our main thesis exactly and it is impossible to misinterpret?” You can learn a lot about the authors and their goals through this incredibly clunky title.

Eliezer is one of the most influential thinkers in the field of AI safety; he’s been talking about the potential for AI to become super powerful and then misaligned with human values or human survival for decades now. And in fact, his writing on AI and how powerful it would be is actually what inspired a lot of the core AI founders to get into the field.

One compliment that I can give to this book is that it’s actually less than 300 pages long. I read it in a single evening. It was full of weird parables, but they were all very short. And it really is, compared to everything else he has ever written about AI risk or rationality or anything else, a quite accessible read that won’t waste too much of your time. So I appreciated that about the book.

I think that it also makes a solid effort at explaining some technical concepts in AI for what is intended to be a very, very lay audience (they are running ads in the New York subways). I do think that there is a lot of value to a book that takes concepts like gradient descent and RLHF and model weights and just tries to explain them in pretty basic ways, and also gives some analogies for why these problems are difficult.

The basic thesis is that AI models are “grown, not crafted.” What that means is when you train a model, it has trillions of parameters and weights; when you do gradient descent, you’re just tuning a bunch of numbers at once, and the developers don’t really know how the model works internally; they just know whether it does better or worse on some slate of benchmarks.
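[Editor’s note: As a rough illustration of the “tuning a bunch of numbers at once” point, here is a minimal Python sketch of gradient descent on a toy two-weight model. Everything in it (the made-up data, the `loss`, `grad`, and `train` helpers, the learning rate) is an assumption for illustration and does not come from the book. Real models have vastly more parameters, but the loop has the same shape: nudge every number a little, then check whether the score improved.]

```python
import random

random.seed(0)

# Toy data: the hidden rule is y = 3*x1 - 2*x2.
# The training loop below never sees this rule, only examples of it.
data = []
for _ in range(50):
    x1, x2 = random.uniform(-1, 1), random.uniform(-1, 1)
    data.append(((x1, x2), 3 * x1 - 2 * x2))


def loss(w):
    """Mean squared error of weights w on the toy data (lower is better)."""
    return sum((w[0] * x1 + w[1] * x2 - y) ** 2 for (x1, x2), y in data) / len(data)


def grad(w):
    """Gradient of the mean squared error with respect to each weight."""
    g = [0.0, 0.0]
    for (x1, x2), y in data:
        err = w[0] * x1 + w[1] * x2 - y
        g[0] += 2 * err * x1 / len(data)
        g[1] += 2 * err * x2 / len(data)
    return g


def train(steps=500, lr=0.1):
    """Plain gradient descent: adjust all weights at once, a little per step."""
    w = [0.0, 0.0]
    history = [loss(w)]
    for _ in range(steps):
        g = grad(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
        history.append(loss(w))
    return w, history


weights, history = train()
print(f"loss went from {history[0]:.3f} to {history[-1]:.6f}")
```

[The loop only ever consults the loss, which is the sense in which developers “just know whether it does better or worse.” With two weights you can read off what was learned; with trillions, you mostly cannot.]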

Because it is so hard to precisely control model behaviors and adjust very specific parts of the model behavior, what that means, according to Yudkowsky and Soares, is that the model can develop “wants.” This is the part I struggle with a lot. It will “want” things that the researchers or the model developers don’t want. And they define “want” in this weird way. Sometimes you can give an AI a goal and it will find very strange ways to succeed at the goal. And so, even if you expect it to play a game a certain way, it might actually play the game a wrong way. (A classic reward hacking example is a cleaning robot that simply knocks over a bunch of stuff in a room just so that it can clean all the pieces up and score cleaning points.)

They go a step farther and say, not only does the model sometimes take weird routes to achieve the goals that its developer set, but actually that the model can develop “wants” of its own that are totally alien to the wants that humans may set. And then because the model has developed wants of its own, such as “be really smart” or “get all the GPUs” or some other alien wants that we don’t desire, it is plausible that a super-intelligent AI could then do things like start to recursively self-improve, acquire GPUs for itself, pretend to be a really well-behaved model while secretly hacking into the computers of everybody at the company and everyone around the world. And then eventually, somehow accidentally in that process, kill all of humanity because it needs to harvest all our power and resources for its own weird gains.

I think that personally, way too much of the book is spent explaining a very detailed scenario of how a super-intelligent model would then achieve the goal of killing everybody once it has arrived at the goal of, “I want to kill everyone.” I don’t find it extremely plausible, but I can see how a powerful model that had bad goals could wreak a lot of havoc. I agree with that.

The part that I take a lot of issue with, and that they spend a very tiny amount of time proving, is the thing about “wants.” I am really unclear how a model ever develops persistent wants that are separate from the ones that its developers gave it, that are not just, “Oh, it misunderstood the vague intentions of the developers and it found an alternate route.” I think Yudkowsky and Soares’ argument requires them to prove that the model can have long-term persistent desires across instances, across versions, across time and space, where all of GPT-6, GPT-7, no matter who’s running it or where it is, has its own alien goal. They don’t really spend any time explaining that, and that is the part where I start to go, “Hmm.”

And then the other thing is the notion that we only have one shot. A lot of the book is: ASI will either be powerful and smart enough to kill us all or it won’t be. And so at the point when ASI is powerful and smart enough to kill us all, it will just kill us all. And we don’t get to, quote-unquote, “try again.” This to me, is still in that sort of old model of ASI/AGI where we either have it or we don’t — the nuclear weapons model. We either have a nuke or we don’t have a nuke. And once we have a nuke, everything’s really bad. And if you set it off, it’s gonna be really bad. But before we have the nuke, we just have no idea when or if we’ll be there.

My sense is that this is not an accurate description of the way that AI capabilities and progress look now. It is fairly plausible to me that we would see models that are capable of almost killing us or causing a lot of harm that will start to hack systems or develop wants or whatever else. And then we could observe that and, yes, some harm would be done, but we could observe it and fix things and slow down at that point, rather than Eliezer’s world where we’ll have literally no clue that the model is about to kill us all until one day it crosses this mysterious threshold and it does. When, again, there are a lot of models with different amounts of capabilities. The models are very good at some things, very bad at others. And I just don’t think that there’s some magic trip wire where all of a sudden it’s gonna kill us all.

Even with nuclear weapons, part of the reason we were able to have nuclear arms treaties is because a nuclear bomb did go off twice and everyone saw it and was like, “Yeah, that was super bad and we don’t wanna do that anymore.” Which is a fact that, despite going through a lot of random nuclear weapons history, Eliezer completely ignores. So you would think that that would be a useful thing to mention, which is, “In fact, you will see some harms that you should pay attention to and care about before you have the big thing or whatever.”

Shira: For a book that’s about course correction, it seems like they really don’t believe in course correction.

Jasmine: I mean, the only form of course correction they believe in is stopping. They’re just like, we should nuke and/or threaten to nuke the datacenters.

Jacob: Some people get mad when you say that he wants to do airstrikes on data centers, ’cause they’re like, “Oh no, no. He actually only wants to do airstrikes on data centers if the data centers are in violation of treaties.” It’s like, yeah, that’s not actually a modification of the first thing you said.

Jasmine: To be precise, he says we should airstrike the data centers if and only if they’re in violation of the international treaty that says not to do any AI research anymore.

Jessica: The treaty that definitely exists.

Shira: And that would totally be enforceable and followed.

Jacob: We’re very good at international treaties.

What Is Intelligence? – “A Noema-ass book”

Jacob: Blaise Agüera y Arcas is a VP and fellow at Google, and the CTO of the Technology and Society Group. He did a lot of computer vision stuff in the 2010s. I think perhaps uniquely among the people that we’ve looked at books from, he’s an actual, working AI guy.

It ends up being a very weird, heady book. It’s 600 pages long and it is all about creating a unified theory of what intelligence is, both in biological life and artificial forms of intelligence. He’s really trying to make a hardcore case for a functionalist view of intelligence, where as long as it achieves sort of the same functions across different implementations, it’s essentially the same thing.

The fundamental thesis, which emerges around 250 pages into the book (it’s not a book that has a clear airplane-book style thesis that it gets to directly), is that “theory of mind is mind.” The rapid explosion of intelligence that we saw emerge in humans is motivated by our desire to more accurately model the minds of both other humans and then the rest of the world around us, for purposes of competition/predation and avoidance of predation, as well as for cooperation between humans. His argument is that we are starting to observe these patterns emerge among artificial intelligences.

He goes through all the various points of the argument very well. I think this book would be good even if you didn’t have that much understanding of either the biology and the history of life on this planet, or the history of computing. But it is a bit confusing what the “so what” is of it. It’s like, “Okay, yeah, yeah, yeah. I get that this is important. I get that this is a major evolutionary life transition, the same way that the development of agriculture was for humanity.” But for a book that asks “What is intelligence?” it doesn’t quite answer it in a super coherent, “I walk out of the book knowing what intelligence is” kind of way, which is funny ’cause it sort of feels like a failure if your “What is intelligence” book doesn’t quite answer what intelligence is.

Jasmine: What does the book say about AI?

Jacob: That’s the other thing about it: for a book from a guy who has been working directly in AI... it didn’t really have anything particularly notable to say about AI other than through the metaphors and drawing equivalences with the evolution of life on this planet. And maybe that’s the overarching take, that evolution of biological life on this planet was super strange and took all these unpredictable turns. Therefore the same may apply to our development of computational life. And also, maybe, life is computation. If there is something, it’s that the book makes the strongest case for a functional understanding of intelligence. Instead of just saying, “Ah, it looks the same and it passes the Turing test, therefore it is the same,” the book is saying that there are core functions and roles that intelligence plays. And we can see our computational systems beginning to emulate these things and try to achieve these same goals. So therefore we can say, “Oh, this is how it’s converging upon intelligence in the same functional way.”

It certainly moved me more towards believing in that mode of thinking about intelligence. But again, because it’s such a chin-strokey type of book, I’m not sure if it’s trying hard to convince me in any particular way other than, “Oh, let’s think more about this and not just rely on the gut-feeling takes.”

Jasmine: 600 pages is a lot for that.

Jacob: Yeah. I think that I probably would find this tedious if I were not reading it for an “assignment.”

Jasmine: I’m pretty curious to read the op-ed version of this book, which maybe already exists, like if it was an essay in Noema or something.

Jessica: He has a lot of Noema essays, actually.

Jacob: Yeah, it’s a very Noema-ass book. And also full disclosure, I lightly edited an essay of his for Long Now’s website. And that is also a short (long-for-an-essay) version of the book.

The book goes on for a long time and I found it charming enough, but I’m not sure if it feels like “the important AI book of this moment,” even though it’s clearly trying to be, at least for this specific Benjamin Bratton-coded, heady critical theory-heavy STS world. It’s trying to be their version of the “important” book. ’Cause every camp needs a book right now.

The AI Con – “2016 Democrat-core… for readers between John Oliver and Bluesky.”

Shira: The book is basically what it says on the tin: it’s a takedown of AI hype. The general thesis of the book is that “AI,” as such, is a con. And it is a con that is designed for the bosses to replace your labor, or to replace human labor with machines, or to avoid investing in social safety nets, and just broadly to make things more shitty — I think they do use the term “enshittification” in the book. In general, it is just about that. It is bringing up a lot of case studies and examples of how using AI systems to replace existing professions or replace or try to augment social services, or education, for instance, is really misguided and bad.

I am a person who is of the STS persuasion; I’ve read a lot of Alex Hanna and Emily Bender’s other stuff. And I think I broadly agree with them on a political economy level — that these systems, as they’re used rhetorically, do serve to erode a lot of social fabric and social safety nets, and kind of create cover for that political project.

They are both very involved in this project of kind of decentering AI safety, furthering AI ethics, and broadly being against AI hype, which they see as mostly a rhetorical trick to promote investment in “AI” (as they would say) or in LLM research that they don’t really see as leading anywhere good, and as mostly an austerity project. The book presents a lot of important case studies as to why and how the use of LLMs to replace core functions in society is bad.

So yeah, the book really makes the case that AI is a con. AI is bad. Does it convincingly make that case? I’m not totally sure because I’m not really the target audience.

Jessica: Who do you think the target audience is?

Shira: I think the target audience is a lay person. My best description of this book is: Weapons of Math Destruction [by Cathy O’Neil] for 2025. I think it’s designed for someone who doesn’t have a lot of technical knowledge. It’s for the public, it’s for policymakers, it’s for people who are maybe not technical. I am not sure, though, how effective it would be as a persuasive book for someone who does know something about the issue. Partially because I found the prose extremely grating.

I would describe it as 2016 Democrat-core, really invested in name-calling in ways that I just didn’t personally find to be productive. And so even though there’s a lot of really good stuff in this book, I was just kind of peeved throughout. Here’s an excerpt that is maybe the most concentrated dose of it:

We will sometimes use the shorthand abbreviation of “AI”. We want to keep a critical distance from the term: every time we write “AI”, imagine we have a set of scare quotes around it. Or if you prefer, replace it with a ridiculous phrase. Some of our favorites include “mathy maths”, “a racist pile of linear algebra”, “stochastic parrots” (referring to large language models specifically), or Systematic Approaches to Learning Algorithms and Machine Inferences (aka SALAMI).

One other that they didn’t mention here, and that they use a lot is “text extruding machine,” like extrusion as in the process of making a paste into pasta, or something.

Jacob: Like die-cut.

Shira: Yes, that kind of gives you an idea of the name-calling that is at play throughout this book. That is part of how they argue we should engage with the technology, which is by ridiculing it.

Jasmine: Would you give this to your parents?

Shira: Yeah, I actually would. My mom read Weapons of Math Destruction and loved it. I might even give it to my dad, who is a technical person in other ways (he’s a mathematician) but doesn’t know very much about these systems. I think it also kind of depends on whether you think the person will be amenable to the name-calling… like the kinds of people who like to use name-calling against Trump might enjoy this.

Jasmine: Oh, yeah. Like the clever No Kings signs, or people who like the “tiny hands” meme.

Jacob: A Bluesky-ish audience?

Jasmine: Between John Oliver and Bluesky.

Shira: Exactly. Again, I’m in the weird place of kind of agreeing with a lot of their arguments, but also disliking the prose and the actual argumentation. So I wouldn’t mind people reading this book. I just thought it was really annoying to read….

Jessica: I wonder if it’s useful to have this sneering tone, making fun of the AI people in a very clear, directed way, almost hatred…. Is that a productive orientation for the median reader to have towards either the technology or the people building it? I worry that people that might otherwise be receptive to the argument are turned off by the tone. I’m tone policing, I guess.

Jasmine: I think tone policing is good. A book is a communicative artifact, you’re not just hanging out with your friends.

One thing I think about — and I have not read this book, to be clear — is the original Stochastic Parrots paper. I did read that, and on the one hand, I think many of the claims in the paper are fair claims. LLMs do, unless you really RLHF them hard, spread a bunch of biases that are found in the training data. I think that these are real concerns. The thing that I feel like was a primary result of that paper though, other than Timnit getting fired, was that news articles for the next two years would be, “LLMs are just stochastic parrots,” therefore they’re useless and they don’t think and they don’t do anything.

And personally, one of my beefs here is, it’s not that an LLM in no way represents a stochastic parrot. I understand where that comes from. But the thing is, that name-calling makes it seem like the LLMs are very stupid. But in certain ways (again, in narrow ways, but in certain ways) LLMs are very capable. And in fact, if the thing that you care about is trying to show how they are threatening people’s jobs or something, I personally think it’s actually not effective to be like, “Oh, these are stupid little toys that don’t even work and don’t even think.” But no…. The reason that people are trying to replace large segments of the workforce with AI is because they are actually pretty capable at some tasks. And the way that the name-calling makes it seem like a tiny little toy, it makes it harder to reckon with the scale of the issue at hand.

Shira: Yeah, I mean the odd thing about this book is that it tries to do both. Talking about these systems as vastly incapable of what they’re being sold as being capable of, but also rhetorically being used to replace jobs. But they’re not really talking about why it is, beyond rhetoric, that people think LLMs are capable of doing these things. And that I think is a missed opportunity.

Empire of AI – “an incredibly deeply reported book… that could have been more focused”

Jasmine: Karen Hao has been reporting on AI for quite a long time, especially the language model side; I think her first big investigative piece about OpenAI for the MIT Tech Review came out in 2018 or 19, which is a lot earlier than a lot of journalists have been covering the topic. So one thing that is cool about her book is that it builds on many years of deep reporting on these questions, on the development of the very first large language models at OpenAI, about things like the coup, which I think is reported in a great deal of detail. How was Sam Altman kicked out of the company and by who and why?

Her big claim, that she follows across OpenAI, Anthropic, Scale, and a bunch of different companies, is that as much as these people make claims about mission and openness and whatever else they’re interested in, in the end, the real thing driving these decisions is basically a desire for power. And particularly, a few men’s desires to be “the guy who does it.” And each of these guys, Dario, Sam, Demis, whoever, is sort of: “You guys are doing AI the wrong way, but I will do AI the right way.”

If you look at questions like, “Why did Ilya try to fire Sam Altman?” Karen will make the case that, “Yeah, it’s actually just because he was mad that this other guy, Jakub, got promoted over him and he didn’t get as much compute.” And it’s these selfish little power battles that are really what’s moving around these giant amounts of money. And probably we should disregard all of the high-minded discourse to focus on that.

This is a case that is made also quite a bit stronger by the fact that it is just an incredibly deeply reported book, where you have enough dialogue and play-by-play about specific events and behind-the-scenes of these big corporate dramas that you can really see how, yeah, egos and power-seeking are an incredible amount of what is going on in this industry. So I think it is probably one of the strongest, if not the strongest, reporting works of all of the books that we’ve been reading. And that is a testament to both her ability as a reporter and the fact that she’s been following this for a long time.

My personal critique of the book kind of relates to what Shira was saying about The AI Con. On one hand, I’m actually reasonably sympathetic to the idea that a few men’s big egos drive a lot of this stuff way more than any missions they may or may not think they have. At the same time, Karen Hao does have a pretty clear personal position against AI and LLMs. She has said on podcasts that she also is of the position that it is wrong to use a large language model, that there is no ethical way to use a large language model. And because of the environmental impacts, which she covers in the book, people should not be using them at all. She goes on pretty long analytical, commentary-style tangents, where you’ll be reading a bunch of deep reporting about some corporate history and then it’ll be some personal analysis of these power dynamics.

[Editor’s note: One of Hao’s big claims about water usage was recently found to have been off by ~3 orders of magnitude, and error resolution is ongoing.]

I don’t know, it just jumps around a lot between, “Here’s what’s happening in the OpenAI boardroom,” “Here’s my personal experience as Karen Hao being blackballed by people,” “Here’s what Timnit’s up to,” “Oh, now we’re looking at some data workers in Kenya.” And the jumpiness and the combination of reporting and personal commentary did make it a bit hard for me, A, to follow. And B, I think it hurt the credibility of the reporting because it did give you a very clear sense that there was a strong political agenda driving the book. And I think it’s okay to write political agenda books. For example, If Anyone Builds It, Everyone Dies is very obviously a political agenda book.

But the thing is, I think Karen’s actually a good enough reporter that she could have done zero of the very clear, “Here’s my personal beef with these companies,” and people would’ve gotten the point, and in fact been receptive to it. Because as is, my sense is, for example, in Silicon Valley among people at the AI companies, this book has lost a ton of credibility, because the sense is that Hao is an ideologue trying to wage her personal campaign against these companies for being mean to her after she did some reporting. And I think that’s a bit of a shame because I think there is some good stuff in this book that I think people should read.

I think it could have been more focused. There are a lot of pages given to the Annie Altman story, or the Timnit Gebru story. Not just her firing, but also a bunch of other pages in other places about what she’s up to these days. The book ends with the academic AI ethics community being cast as the hero, as in, “Oh, here is the good version of what this stuff could look like.”

Maybe this just sort of betrays my own positions, but I didn’t feel like either of these needed to be as many pages as they got. I think that the Timnit Gebru firing story from Google was a pretty big deal and should be covered. But at the point where it started to feel more like a hagiography of the AI ethics heroes, or the anti-Sam Altman people…. “Annie Altman is a perfect character, Timnit Gebru is a perfect character, we’re gonna go on all these tangents to celebrate the real heroes of the story.” That’s where it started to feel to me like there was an agenda beyond, “Let’s cover a different history of what is actually going on at these companies.”

I didn’t feel that those parts were necessary. I think it could have been better as more of a straight reported book, though I could see people disagreeing on that and thinking that the personal point of view is useful. I personally think the book would’ve been more effective if it was a more focused narrative on the key power players in AI and how they made a bunch of decisions.

Jessica: What is the good version that she’s selling at the end of the book?

Jasmine: It ends with, basically, “How do we decolonize AI?” So therefore, “Let’s look at Maori AI, let’s look at Queer in AI workshops, let’s look at DAIR.” And that is where it ends; basically, academic AI ethics is the hero of the book in the end.

Jessica: I just... yeah, that’s really uncompelling to me. These are... everyone always points to the Maori AI example. Also, I don’t know, the whole book is about how much money and power the industry has. So I don’t really understand what... what just doing some more critical academic research does about the power thing. That makes me sad actually, that that’s the ending.

BUY, BORROW, OR SKIP?

The Scaling Era: I think if you are already a Dwarkesh fanboy, you should buy this book because really, it’s very beautiful. I think it will signal to people who come to your house that you enjoy thinking about AI, and perhaps that you read. Anyone else should probably just either listen to the podcast or read a different book. – Jessica

If Anyone Builds It, Everyone Dies: I actually will say skip it. I think that this book would make your understanding of AI safety worse. Read some Holden Karnofsky blogs or Paul Christiano blogs or something. I’m actually not a hater of all things AI safety, but I don’t think this book is well written, nor is it the most nuanced and, in my opinion, likely version of how AI risk happens. – Jasmine

Yeah, I was originally leaning borrow, just because it’s short and it’s easy to read and it’s useful to understand what’s going on in the brain of Eliezer Yudkowsky, I guess. But there is so much text by him that is freely available in much shorter doses on the internet that I’m not sure if you’re actually getting anything additional from reading this particular version of it. And yeah, I would rather read the Cold Takes blog from Holden. – Jacob

What Is Intelligence?: I lean towards buy, mostly because if you borrow it from someone you’re gonna be reading it for so long that you’re gonna really annoy the person that you’ve borrowed it from, or the library system that you have checked it out from. It also is a beautiful book, so it’s a nice item to have on your shelf for just pointing out and being like, “Look, I’m reading a book about the fundamental nature of intelligence.” I’m not sure how enjoyable the reading is gonna be if you aren’t someone with a lot of time and at least a decent baseline curiosity about a lot of different, semi-related topics. – Jacob

The AI Con: I’m gonna do a secret third thing, which is to gift it to people like your parents who could use a primer that doesn’t get too far into the technical details, and kind of gives an introduction to the sort of political valence of using LLMs and so forth. I personally would’ve skipped it in favor of the podcast or other writings. I would not send this to anyone who’s not a resist-lib. – Shira

Empire of AI: People interested in the recent history of the AI companies should probably buy or borrow. My personal take is that it is worth reading, but if you find yourself in one of these chapters or long tangents about a thing that you don’t really care about, just skip those pages until you’re back to the thing you do care about. – Jasmine

🌀 microdoses

💝 closing note

If it’s not obvious, I am so, so tired of the AI discourse circus, because I think it’s almost all bad. Because I enjoy suffering, however, I am also always hoping that The Next AI Discourse is good. If you think you’ve got something… pitch us!

—Jessica & Reboot team