One of the many reasons I decided to join Reboot was to write about how tech sees art and how art sees tech. The algorave, where artists write code to create music and images in real time, was an obvious candidate for my first contribution to this publication, but it also presented me with an opportunity to sort out my own conflicted thoughts about the scene. After going to as many algoraves as I could and chatting with members of the live coding community, I can’t tell you how to feel about live coded music, but I hope this piece gives you new ways of listening to, looking at, and participating in algoraves.

Show Us Your Screens!

By Hannah Scott

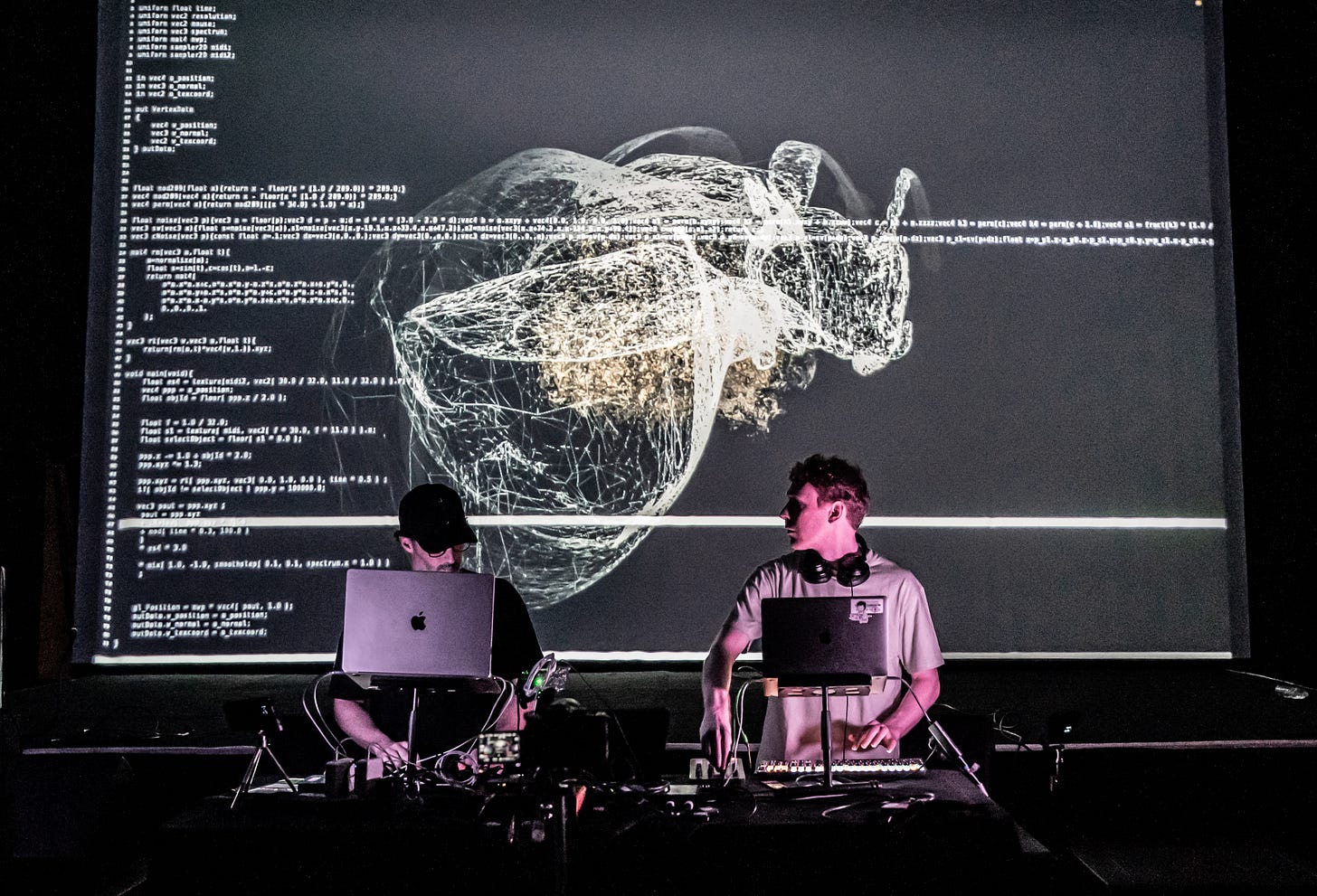

Dancing to music made with code didn’t come naturally to me at first. While the artist co-op’s open concept floor plan provided ample surface area for moving my body, I spent most of my first algorave facing the pair of programmers writing code on their laptops to generate music and visuals, my feet glued to the poured concrete floor. I wasn’t alone; the rest of the audience, mostly clean-cut 20- and 30-somethings with bike helmets dangling from backpacks, also swayed politely as they trained their focus on the lines of code projected onto a white wall. The performer, fingers racing across a glowing pink keyboard, would change a variable labeled “tempo” from 0.4 to 0.6 and, lo and behold, I’d hear the pace of a synthesized kick drum quicken. I couldn’t fully parse the esoteric commands these live coders typed into their computers (“struct ‘1(<3 5>,8,1)’ $ perc”), but I grokked that “sample :bd_haus” introduced a four-on-the-floor bass drum beat into the track.

The term “algorave” has come to describe this scene, while “live coding” describes what performers at this kind of show do. Clustered around nodes like AV Club SF (which hosted the first algorave I attended in 2021) and Live Code NYC, live coders generate music or visuals by writing code in real time. In a typical set, two performers will compose lines of code on their laptops standing side by side, one artist responsible for sound and the other visuals. Algoraves aren’t defined by particular sonic qualities, though glitchy dance tracks with steady bass lines and ambient electronic soundscapes are commonplace. I left that first algorave feeling that the music sounded programmed, which indeed it was.

At the same time, I had started to tire of other kinds of electronic music experiences that, just a few years ago, had thrilled me with a machinic sound that seemed to mirror my technological milieu. I sought out basements and warehouses to lose myself in techno’s steady yet punishing pulse, feeling that these experiences enfolded me into my generation’s version of an authentic underground scene. But eventually, I couldn’t remember why I wanted to stand shoulder-to-shoulder with other partygoers, all facing forward, to watch a DJ turn knobs. Mixes on internet radio stations all started to sound like the same saccharine mash-up of pop edits. It seemed that club culture had become burdened by nostalgia, endlessly replicating Y2K-era digital aesthetics. I still found glimmers of freedom while dancing in redwood groves until dawn, but electronic music’s space of possibility—particularly the art of performing it live—felt more and more condemned to derivatives of its past.

I didn’t immediately feel that algoraves offered a panacea for my musical malaise, but I saw something I didn’t see elsewhere. There was an earnestness in live coders’ efforts to hack together new ways of making things with computers—the speed with which live coders architected intricate while loops, the cheeky comments programmers left in their code to implore their audience to dance, the unpretentious manner with which artists explained their process to inquisitive attendees. I suppressed the jaded critic in me and decided to give algoraves a chance.

While computer-generated music is nearly as old as computers themselves, “live coding” emerged in the early 2000s among a loose group of London art school students. Burgeoning algorithmic artists like Alex McLean and Adrian Ward had been experimenting with what they termed “generative music,” or music made with code, though not necessarily written live during a performance. The possibility of coding music in real time largely emerged from a technical affordance: programming languages like SuperCollider had begun to introduce the ability to alter a program as it ran. Rather than letting this capability languish in a long list of unassuming version updates, early live coders imbued on-the-fly programming with a revolutionary fervor. In 2004, early live coders christened their growing community TOPLAP and, holding true to their art school bombast, issued a manifesto. Much as 90s candy ravers rallied around “PLUR” (peace, love, unity, respect), the algoraver’s creed outlines a set of ideals to be instantiated in the practice of live coding. Unlike the stated values of most other do-it-yourself music scenes, though, TOPLAP’s tenets outline not only a particular mode of creating music or art, but also a particular mode of relating to computation.

One of the TOPLAP manifesto’s incantations for proper application of computer code declares: “Obscurantism is dangerous. Show us your screens.” While programming languages, visual motifs, and musical styles vary from algorave to algorave, projecting code for the audience to observe is such a mainstay within the scene that it has become a de facto requirement. By turning over the means of algorithmic production to the audience’s gaze, live coders demonstrate a version of software that puts its algorithms front and center, rather than obscuring them behind attention-harvesting feeds, sleek UI features, and chatbot interfaces. Whether an audience, especially a non-technical one, is able to parse meaning out of the drum loops and notation sequences, though, is questionable—perhaps the presence of uninterpretable code further mystifies the algorithm.

But for live coders, showing the screen is not just a gesture toward opening black boxes; it’s also a means by which these artists externalize their own improvisatory processes. Watching an algorithmic musician compose drum loops and bass lines to serve as scaffolding for hi-hats and synth flourishes to come, one observes the artist’s thought process laid out in semi-readable text. Where a freestyling piano player translates her sonic ideas into notes on a keyboard, a live coder will phrase her ideas in algorithmic instructions that the computer translates into sound. In some ways, programming music is less like playing an instrument than having a conversation with a computer. Algorave’s practice of transparency is a way to make that conversation visible to the audience listening to its outputs, even though they may not be able to fully understand the thoughts exchanged between live coder and machine.

Sitting around a dining table at the same co-op that hosted the first algorave I attended, I asked AV Club SF members R Tyler and Nathan Ho about their introductions to the scene. Both, to my surprise, started making music with code before ever having seen algorithms performed live. Like many other members of the live coding community in San Francisco, R Tyler and Ho learned to play piano as kids and developed software engineering skills in college. When they learned about idiosyncratic programming languages for making music, they immediately began tinkering.

For both R Tyler and Ho, making music with code marries their traditional music training with their programming chops; their philosophies of performing programmed music, however, differ greatly. Ho prefers to write code offline, record its output, and then play the resulting tracks live much like a DJ might. For Ho, programming on stage results in music that evolves too slowly—one can only type out do/while loops and variable declarations so fast. On the other hand, R Tyler splits the difference between on- and offline music programming by preparing libraries of melodies and drum beats ahead of a set. On stage, R Tyler will transition between these prepared chunks, adjusting BPM or adding syncopation in response to the energy in the room. The immediacy that Ho finds lacking in most live coding sets emerges, for R Tyler, in that connection to the audience, in the ability to describe or demonstrate through projected code what changes are being made in real time.

Ho and R Tyler agreed that one of live coding’s most attractive features is the ability to construct one’s own instruments. Live coders not only craft lines of Haskell or JavaScript, they often also craft the raw materials employed in live coding performances: programming languages, development environments, and other sonic implements. Some live coders, as Ho and R Tyler point out, fall into a “system building trap,” meaning that they become so enmeshed in building tools that they never actually get around to performing. This trap ends up serving the broader live coding community, as the majority of its programming languages, including SuperCollider, Tidal Cycles, and ChucK, are free and open source. The breadth of tools developed within the live coding community reflects the diversity of approaches and practices live coders bring to performance. Hydra allows a user to generate visuals that look like analog video in the browser, while Strudel ports the logic of Haskell-based Tidal Cycles over to JavaScript.

Building their own tools from scratch also allows music programmers to circumvent some of the implicit rules of engagement baked into other tools for electronic music-making. While the digital audio workstation Logic accommodates an array of different music-making processes, its linear timeline interface requires users to stick to chronological music conventions. But with Tidal Cycles, a popular live coding language, a user writes “patterns” which they can initiate in any order, at any time. The blank slate of an integrated development environment may allow live coders to dispense with constraints imposed by other instruments, or at least decide which constraints they prefer, but there are some music conventions that persist even in the greenfield pastures of software development.

elekhlekha อีเหละเขละขละ (Thai for chaos, dispersedness, entropy) is a Bangkok-born, Brooklyn-based duo composed of Nitcha “Fame” Tothong and Kengchakaj “Keng” Kengkarnka, whose audiovisual performances break the expectation that Southeast Asian sound cultures must be “traditional.” Keng, whose musical training was rooted in a Western jazz piano tradition, began using code to produce music that reflects Southeast Asian sound cultures during the pandemic. He quickly found that his years of training hadn’t prepared him to properly replicate tuning systems that diverge from Western music’s equal temperament. Making music with algorithms allowed Keng to start unlearning the musical norms that had become muscle memory—with a neat declaration of a variable to represent a specific frequency, Keng could insert a new note into an octave.
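To make that idea concrete, here is a minimal Python sketch of the arithmetic involved. It is an illustration under stated assumptions, not Keng’s code or the syntax of any live coding language: the 440 Hz reference pitch is a common convention, and the function name `equal_tempered_octave` and the extra frequency of 452 Hz are hypothetical values chosen only to show a pitch that falls between two piano keys.

```python
# Equal temperament splits an octave (a 2:1 frequency ratio) into equal steps.
# With 12 divisions, each step multiplies the frequency by 2 ** (1 / 12).

A4 = 440.0  # reference pitch in Hz (assumed convention)


def equal_tempered_octave(root_hz: float, divisions: int = 12) -> list[float]:
    """Return the frequencies from root_hz up to one octave above it."""
    return [root_hz * 2 ** (step / divisions) for step in range(divisions + 1)]


# The thirteen pitches from A4 up to A5 as a piano would tune them.
western = equal_tempered_octave(A4)

# Hypothetical extra note, declared directly as a frequency in Hz.
extra_note_hz = 452.0

# The same octave with the new pitch slotted in between A4 and the next key up.
custom_scale = sorted(western + [extra_note_hz])
```

A keyboard fixes its pitches in hardware, while in code a scale is just a list of numbers, so adding a note the piano lacks takes a single line.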

Nevertheless, there’s little room to move beyond the 2:1 octave ratio, that gold standard of Western musical harmony, in Keng’s live coding language of choice, Orca. But for Keng, as for most live coders, the possible sounds one can create don’t have to be reducible to the affordances of an instrument, whether a keyboard or a Eurorack synthesizer or a programming language. To break these limitations, Keng and Fame built a custom virtual gong ensemble that assigns multiple frequencies to one sound, meaning that each time the digital instrument is played, it sounds a bit different, more nuanced, something closer to the non-Western sound cultures they want to share with their audiences.

I asked Keng whether writing code feels similar to playing piano, or if the two constitute entirely separate modes of creative thought. He paused for a beat, then offered that while writing lines of code is becoming increasingly intuitive, there’s still more of a delay in getting from idea to musical notes when he writes code than when his hands waltz across piano keys. But by continually confronting that gap between intent and execution, Keng is gradually undoing the aural bias that once made non-Western tonal systems sound “out of tune.” That break between idea and execution, that delay which represents a process of translation, is where the conversation between live coder and computer happens. At first those delays sounded like erroneous glitches, but eventually they became the thing I sought out in algoraves.

Decriers of programmed music typically criticize its lack of “human touch,” its perfectly timed, machine-like quality. Yet, it is becoming increasingly easy to make similarly “perfect”-sounding music without even learning how to code. Newly released generative AI products like Suno can produce entire songs from natural language ideas in mere seconds. The tracks I can generate with prompts like “synthwave minimal” or “driving techno Berghain” may not sound all that different from some of the music I hear at algoraves. Both are generated with algorithms of varying complexity, and both retain an audible mark of their computational production.

But the goal of algorithmic music is not to expedite music creation, which is the thesis of products like Suno, but to perform the process of making music with code. A good algorave performance asks more of both the performer and the audience, not less. To make those performances interesting or surprising, live coders introduce various forms of complexity into their performances, whether through custom tools for performing non-Western tuning systems, chatbots that explain blocks of code to audience members, or even AI models that convert large data sets into sound. This performative mode of conversing with computers presents an alternative to more conventional modes of engaging with technology, which work to ensure computer-ness recedes from view and expend labor on getting the computer to talk more like humans rather than asking humans to figure out how to talk to computers.

Commercial AI products like Suno also claim to democratize music-making, eliminating the need to learn any new craft. Live coders also seek to make electronic music production more accessible, but elevate process over output. They argue that accessibility need not correspond to expedited production of familiar music; rather, this democratization should open up new channels of experimentation. All you need to live code is a laptop, but that low barrier to entry is an invitation to construct something novel.

Algorithms have become something of a cultural pariah, but algorithmically produced music is on the rise. Live coding continues to spread across the globe, land on university syllabi, and populate festival lineups. Artists like DJ_Dave and Lil Data have toured with JPEGMAFIA and Danny Brown, signed onto influential labels like PC Music, and accumulated tens of thousands of monthly Spotify listeners. Yet, while they welcome the wider attention, most live coders I’ve spoken to don’t view mainstream success as a goal in itself. They don’t want to see every Boiler Room set become an algorave or every NTS spot produced with code. Instead, they hope their scene’s success pushes the wider electronic music scene to experiment with new ways to perform with computers.

At the most recent algorave I attended, an artist began their set by typing a comment in the editor that read “# gonna try writing this from scratch lol :)”. Layer by layer, they built up a high-BPM, bass-heavy techno set. The performance wasn’t without its glitches: the track fell completely silent at some points, leaving me to wonder if the artist had accidentally commented out the active block of musical code. But I’d learned by this point that the audible results of a live coding set are really just one piece of the experience. It’s by learning to pay attention to each artist’s idiosyncratic approach to real-time experimentation, glitches and all, that algoraves become more than the sum of their lines of code.

Hannah Scott is an editor at Reboot. She would like to thank R Tyler, Nathan Ho, Rodney Folz, Nitcha “Fame” Tothong, Kengchakaj “Keng” Kengkarnka, and Dan Gorelick for conversations that helped shape this essay.

Reboot publishes free essays on tech, humanity, and power every week. If you want to keep up with the community, subscribe below ⚡️

🌀 microdoses

I was recently delighted by live coder Char Stiles’s “fantasy IDE,” a kinetic visual display featuring a dozen browser windows bouncing around like DVD logos.

In non-algorithmic music news, I’m a latecomer to ML Buch’s Suntub (2023), but it’s a lovely example of playing with non-standard tuning schemes.

I checked out Trevor Paglen’s solo exhibition, CARDINALS, at Altman Siegel in San Francisco. The landscape photographs peppered with UFOs are apparently undoctored, and I Want To Believe!

💝 closing note

If you’re a live coder or an algorave-goer, I would love to hear about how your experiences align with or diverge from my own. I’m also always looking for pitches that bring audiences into nascent artistic and cultural phenomena — drop your ideas here!

See you on the dance floor,

Hannah & Reboot team